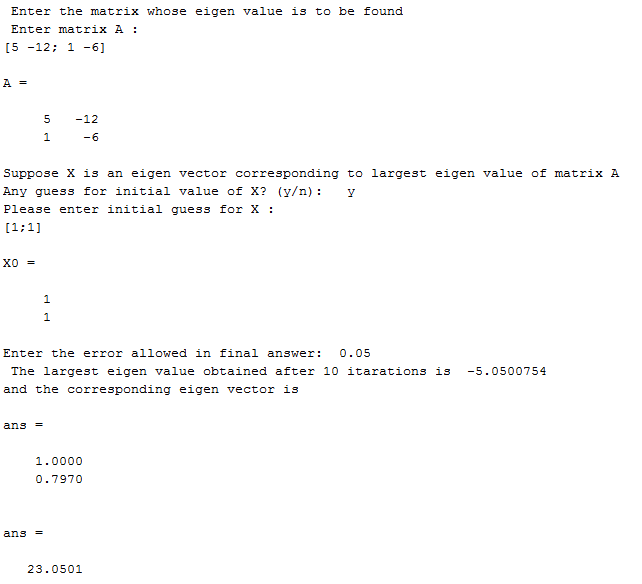

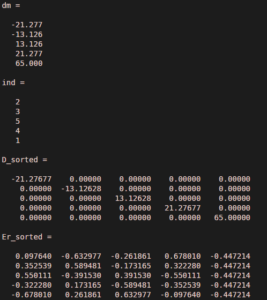

The Principal Component Analysis (PCA) is equivalent to fitting an n-dimensional ellipsoid to the data, where the eigenvectors of the covariance matrix of the data set are the axes of the ellipsoid. The eigenvalues represent the distribution of the variance among each of the eigenvectors. To understand the method, it is helpful to know something about matrix algebra, eigenvectors, and eigenvalues.

Here is a two-dimensional example (p = 2 variables) that performs a PCA without the MATLAB function `pca`, using instead the function `eig` to calculate the eigenvectors and eigenvalues.

Assume a data set that consists of measurements of p variables on n samples, stored in an n-by-p array. As an example we create a bivariate data set of two vectors, 30 data points each, with a strong linear correlation, overlain by normally distributed noise.

The task is to find the unit vector pointing in the direction of the largest variance within the bivariate data set `data`. The solution of this problem is to calculate the largest eigenvalue D of the covariance matrix C and the corresponding eigenvector V

$$C V = D V \quad \Longleftrightarrow \quad (C - D E)\, V = 0$$

where E is the identity matrix. This is a classic eigenvalue problem: a linear system of equations with the eigenvectors V as the solution.

Step 1

First we have to mean center the data, i.e. subtract the univariate means from the two columns. In the following steps we therefore study only the deviations from the means.

Next we calculate the covariance matrix C of `data`. The covariance matrix contains all the information necessary to rotate the coordinate system. The rotation creates new variables that are uncorrelated, i.e. the covariance is zero for all pairs of the new variables. This decorrelation is achieved by diagonalizing the covariance matrix C. The eigenvectors V belonging to the diagonalized covariance matrix are linear combinations of the old base vectors, thus expressing the correlation between the old and the new variables. We find the eigenvalues of the covariance matrix C by solving the equation

$$\det(C - D E) = 0$$

The eigenvalues D of the covariance matrix, i.e. the diagonal elements of the diagonalized version of C, give the variance along the new coordinate axes.

We now calculate the eigenvectors V and eigenvalues D of the covariance matrix C with `[V,D] = eig(C)`. As you can see, the second eigenvalue of 4.1266 is larger than the first eigenvalue of 0.0177. Therefore we need to change the order of the new variables in `newdata` after the transformation. Display the data together with the eigenvectors representing the new coordinate system.

The eigenvectors are orthogonal unit vectors, therefore their norm (e.g. `norm(V(:,1))`) is one and their inner (scalar, dot) product is zero.

Next we calculate the data set in the new coordinate system. We need to flip `newdata` left/right (e.g. with `fliplr`), since the second column is the one with the largest eigenvalue.

The eigenvalues of the covariance matrix (the diagonal elements of the diagonalized covariance matrix) indicate the variance in each new coordinate direction. We can use this information to calculate the relative variance of each new variable by dividing the variance along each eigenvector by the sum of the variances. In our example, the first and second principal components contain 99.57% and 0.43% of the total variance in `data`.

Display `newdata` together with the new coordinate system. We obtain the variances of the new variables with `var(newdata)`. Alternatively, we get the relative variances by normalizing the eigenvalues, `variance = D / sum(D(:))`, which again yields 99.57% and 0.43% of the variance for the two principal components.

Finally, do the same experiment using the MATLAB function `pca`. We get the same values for `newdata` and `variance`.

Now you are ready for a cup of good south Ethiopian coffee!

An earlier post to this blog demonstrated linear unmixing of variables using the PCA with MATLAB. A second post explained the use of the principal component analysis (PCA) to decipher the statistically independent contribution of the source rocks to the sediment compositions in the Santa Maria Basin, NW Argentine Andes.
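The steps of this post (mean centering, covariance matrix, `eig`, reordering, projection into the new coordinate system, relative variance) can be collected into one short script. This is a minimal sketch, not the author's original code: the slope of 2 and the noise level of 0.1 used to build the synthetic data are our own assumptions, so the printed percentages will differ from the 99.57% and 0.43% quoted above. Variable names other than `data`, `C`, `V`, `D`, `newdata` and `variance` (e.g. `lambda`, `idx`, `vars`) are hypothetical.

```matlab
% Minimal PCA sketch with eig.
% ASSUMPTION: synthetic data with slope 2 and noise level 0.1,
% not the values used in the post.
n = 30;                              % 30 data points per variable
x = randn(n,1);
data = [x, 2*x + 0.1*randn(n,1)];    % strong linear correlation plus noise

% Step 1: mean center the data (subtract the column means)
data = data - mean(data);

% Covariance matrix of the centered data
C = cov(data);

% Eigenvectors (columns of V) and eigenvalues (diagonal of D) of C
[V,D] = eig(C);

% eig does not guarantee a sorted order, so sort the eigenvalues in
% descending order and reorder the eigenvectors to match
[lambda,idx] = sort(diag(D),'descend');
V = V(:,idx);

% Eigenvectors are orthonormal: unit norm, zero dot product
norm1 = norm(V(:,1));
dot12 = V(:,1)' * V(:,2);

% Data set in the new coordinate system (principal component scores)
newdata = data * V;

% The variances of the new variables equal the eigenvalues ...
vars = var(newdata);
% ... and the normalized eigenvalues give the relative variance
variance = lambda / sum(lambda);
fprintf('PC1: %.2f %%, PC2: %.2f %%\n', 100*variance);
```

Sorting the eigenvalues with `sort(...,'descend')` replaces the left/right flip of `newdata`. For two variables returned in ascending order both give the same result, but sorting also works for p > 2 and does not depend on the order in which `eig` happens to return the eigenpairs.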
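The post states that the eigenpairs solve $C V = D V$, or equivalently $(C - D E)V = 0$ with $\det(C - D E) = 0$, and that rotating the data by the eigenvectors removes all covariance between the new variables. Both claims can be checked numerically. This is a sketch under the same kind of assumption as any synthetic example: the data model (slope 2, noise level 0.1) is our own choice, and the helper names `res`, `dets`, `Cnew` and `offdiag` are hypothetical.

```matlab
% Sketch: numerical check of the eigenvalue problem and of the
% decorrelation. ASSUMPTION: synthetic data (slope 2, noise level 0.1).
n = 30;
x = randn(n,1);
data = [x, 2*x + 0.1*randn(n,1)];
data = data - mean(data);            % mean centering

C = cov(data);
[V,D] = eig(C);
E = eye(size(C));                    % identity matrix E

% Residuals of (C - lambda*E)*v = 0 for both eigenpairs
res = [norm((C - D(1,1)*E) * V(:,1)), norm((C - D(2,2)*E) * V(:,2))];

% Each eigenvalue is a root of det(C - lambda*E) = 0
dets = [det(C - D(1,1)*E), det(C - D(2,2)*E)];

% The rotated variables are uncorrelated: their covariance matrix
% equals the diagonal matrix D (off-diagonal terms are ~0)
Cnew = cov(data * V);
offdiag = Cnew(1,2);
```

All residuals, determinants and the off-diagonal covariance come out at the level of floating-point round-off rather than exactly zero.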
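The post compares the `eig` result with the MATLAB function `pca`, which requires the Statistics and Machine Learning Toolbox. The sketch below swaps in a different technique that needs no toolbox: the singular value decomposition of the centered data, whose squared singular values divided by n - 1 are the eigenvalues of the covariance matrix. The synthetic data model is again our own assumption. Eigenvectors are only defined up to sign, so the comparison uses absolute values. The `pca` call is left as a comment; its outputs `coeff`, `score`, `latent` and `explained` correspond to `V`, `newdata`, the eigenvalues and the relative variance in percent.

```matlab
% Sketch: the same decomposition via svd (a swapped-in alternative to
% eig that avoids forming C). ASSUMPTION: synthetic data as above.
n = 30;
x = randn(n,1);
data = [x, 2*x + 0.1*randn(n,1)];
data = data - mean(data);            % mean centering

% eig-based reference, sorted by decreasing eigenvalue
[V,D] = eig(cov(data));
[lambda,idx] = sort(diag(D),'descend');
V = V(:,idx);

% SVD of the centered data: data = U*S*W'
[U,S,W] = svd(data,0);               % economy size, singular values descending
lambda_svd = diag(S).^2 / (n-1);     % eigenvalues of cov(data)
score_svd = data * W;                % principal component scores (equal to U*S)

% With the Statistics and Machine Learning Toolbox, pca gives the same
% result in one call (pca centers the data itself):
% [coeff,score,latent,~,explained] = pca(data);
```

The SVD route is numerically preferable when variables differ strongly in scale, because it works on the data directly instead of squaring them into a covariance matrix.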
